GitLab CI Pipeline for Go Projects

Having recently learned Go, and being about to start my first project, I began putting together a GitLab CI pipeline for Go projects. Scouring the Internet turned up mostly old posts on the subject, and both those posted pipelines and numerous actual Go project pipelines were missing jobs from my set of goals for a CI pipeline. So I built this GitLab CI pipeline for Go projects.

Pipeline goals #

This adventure started with my first Go project: I wanted, but could not find, a pipeline that met the goals I have for just about any pipeline:

- The pipeline should include all checks required to confirm the software is in a deployable state, and should generate any reporting required when deploying the software.

- The pipeline jobs should use `rules` logic to include or exclude jobs or settings where an expectation is implied. For example, it should analyze dependencies if the project includes dependencies (if a `go.sum` file exists), or deploy binaries if binaries are built, but should exclude those jobs where they are not applicable.

- The pipeline should allow common configuration options through `variables` (globally if they affect multiple jobs, to avoid duplication).

- The pipeline should automate deployment when the project should be deployed, to ensure consistency.

- The pipeline jobs should provide `reports` to leverage GitLab capabilities, where available. For example, `codequality`, `junit`, and `coverage_report` reports.

- The pipeline should be optimized to execute as many jobs as possible in parallel with clearly defined job dependencies, and should minimize repeating the same tasks in favor of performing them once and passing the artifacts as required.

The pipeline detailed here does not include common jobs that fall under the previously defined goals but are already available generically in my templates, which include:

- Code quality analysis for common languages (excluding Go) that are typically included in a GitLab project. For example, linting Markdown, YAML, and shell scripts, and counting code by language.

- Code security analysis including Static Application Security Testing (SAST) and secret detection.

- Supply chain security analysis including several flavors of dependency vulnerability scanning and Socket dependency analysis.

- Jobs to build, test, and deploy container images, which are not unique to Go.

- Jobs to automatically create a GitLab release.

GitLab pipeline for Go projects #

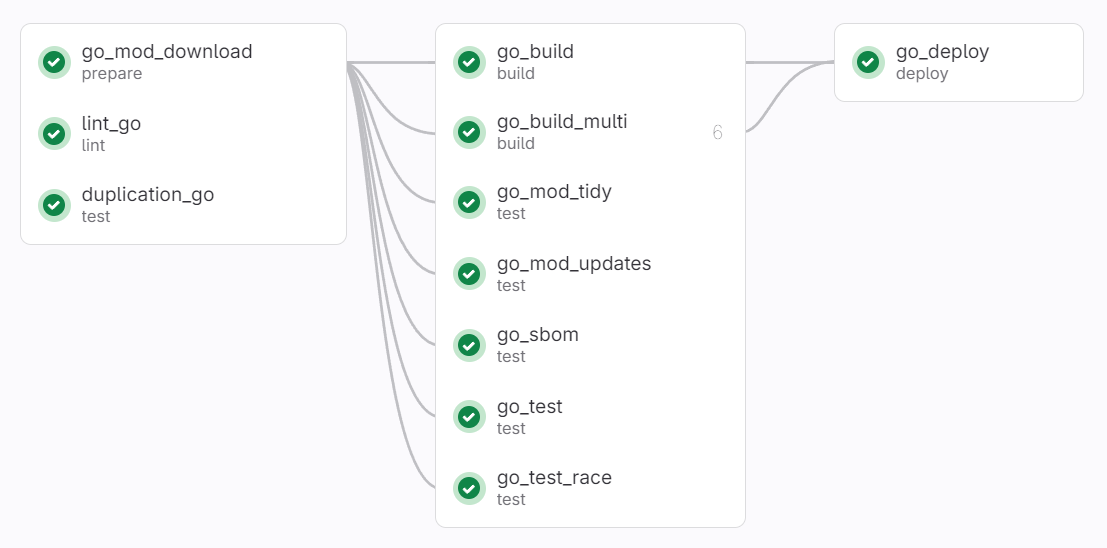

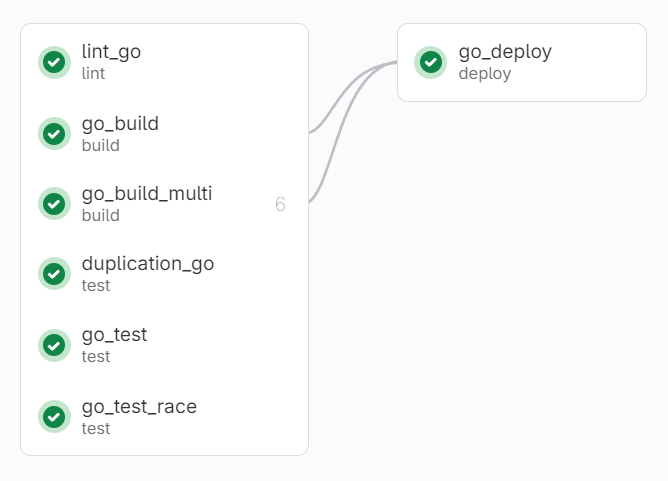

The complete CI pipeline for Go projects is shown below, ordered by execution sequence with job dependencies shown. The first is for a Go project with dependencies.

The second is the same pipeline showing how it adapts if the project has no dependencies.

The details of all jobs are discussed in the subsequent sections, which assume a working knowledge of both Go and GitLab CI. The included CI jobs have been simplified for this post where specific aspects are not relevant to this introduction. Each section also includes a link to the complete job in my templates project for reference.

Global Go template #

To establish commonality across all of the jobs running Go code, a `.go` template was created with several global settings:

- Define a common `image` so jobs are all running on the same version of Go.

- Set `GOPATH` to `$CI_PROJECT_DIR/.go` so that it's within the project directory. This allows saving its contents as artifacts and/or cache (which must be within the project directory), and establishes a common directory for these jobs.

- Set `GO_BIN_DIR` to a common location where all binaries are saved to and retrieved from.

```yaml
.go:
  image: golang:1.21.6-alpine
  variables:
    GOPATH: $CI_PROJECT_DIR/.go
    GO_BIN_DIR: bin
```

Some jobs require changes to these settings, and those are specifically identified below.

Note: one impact of resetting `GOPATH` is that it becomes the location for any modules installed with `go install`. They install as expected, but `$GOPATH/bin` is not included in `PATH`, so those tools either need to be executed from `$GOPATH/bin`, or `$GOPATH/bin` needs to be added to `PATH` before executing them.

Download dependencies #

It's a common expectation today for applications to have external dependencies, and sometimes there are many, either direct or transitive. These dependencies are required in multiple subsequent jobs, so if there are any (that is, if a `go.sum` file exists), they're downloaded first in the `go_mod_download` job and saved as artifacts for use in those subsequent jobs. This not only saves time in subsequent jobs but, more importantly, ensures that all jobs use the same versions. They're also saved in a project-wide cache to reduce download times in subsequent pipelines.

```yaml
go_mod_download:
  extends:
    - .go
  needs: []
  rules:
    - exists:
        - '**/go.sum'
  before_script:
    # Required since this is set as GOPATH
    - mkdir -p .go
  script:
    - go mod download
  cache:
    key: ${CI_PROJECT_NAME}-go
    paths:
      - .go/pkg/mod/
  artifacts:
    paths:
      - .go/pkg/mod/
```

This assumes that the project is not vendoring dependencies. Although Go includes a command specifically for it (`go mod vendor`), I believe vendoring promotes bad configuration management practices and creates potential licensing concerns. It is not recommended, so it is not accounted for here.

Linting #

Linting is accomplished via the `lint_go` job using golangci-lint, which integrates dozens of Go linters and runs them concurrently (it is, of course, a Go app). A YAML file (`.golangci.yml`) can be used to configure the enabled linters, the settings for each linter, and so on. The job is set up to use the `.golangci.yml` in the project root if one exists; otherwise, a default file is retrieved from a URL (which can be overridden via a variable, as shown in the example). The results are saved as a GitLab Code Quality JSON report uploaded to GitLab, so they're available in the Code Quality merge request widget. A summary is also output to the job log.
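To make the `jq` filter in `lint_go` concrete, here is a rough Go equivalent of what it does with the Code Climate report: decode the JSON array and print one `path:line description` line per issue. This is only an illustrative sketch; the struct models just the three fields the filter reads, and the `summarize` function and sample report are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// codeClimateIssue models only the fields that the lint_go jq filter reads;
// real Code Climate issues contain more fields, which are ignored on decode.
type codeClimateIssue struct {
	Description string `json:"description"`
	Location    struct {
		Path  string `json:"path"`
		Lines struct {
			Begin int `json:"begin"`
		} `json:"lines"`
	} `json:"location"`
}

// summarize is a rough equivalent of the jq filter
// '.[] | "\(.location.path):\(.location.lines.begin) \(.description)"'.
func summarize(report []byte) ([]string, error) {
	var issues []codeClimateIssue
	if err := json.Unmarshal(report, &issues); err != nil {
		return nil, err
	}
	lines := make([]string, 0, len(issues))
	for _, issue := range issues {
		lines = append(lines, fmt.Sprintf("%s:%d %s",
			issue.Location.Path, issue.Location.Lines.Begin, issue.Description))
	}
	return lines, nil
}

func main() {
	// Hypothetical report containing a single issue.
	report := []byte(`[{"description":"errcheck: Error return value is not checked",` +
		`"location":{"path":"main.go","lines":{"begin":12}}}]`)
	lines, err := summarize(report)
	if err != nil {
		panic(err)
	}
	for _, line := range lines {
		fmt.Println(line) // main.go:12 errcheck: Error return value is not checked
	}
}
```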

```yaml
lint_go:
  extends:
    - .go
  image: golangci/golangci-lint:v1.55.2-alpine
  needs: []
  variables:
    CONFIG_FILE_LINK: https://gitlab.com/gitlab-ci-utils/config-files/-/raw/10.1.1/Linters/.golangci.yml
  rules:
    - exists:
        - '**/*.go'
  before_script:
    - apk add jq
    # Use default .golangci.yml file if one does not exist
    - if [ ! -f .golangci.yml ]; then wget "$CONFIG_FILE_LINK"; fi
  script:
    # Write the code quality report to gl-code-quality-report.json
    # and print linting issues to stdout in the format: path/to/file:line description
    - >
      golangci-lint run --out-format code-climate $LINT_GO_CLI_ARGS |
      tee gl-code-quality-report.json |
      jq -r '.[] | "\(.location.path):\(.location.lines.begin) \(.description)"'
  artifacts:
    reports:
      codequality: gl-code-quality-report.json
    paths:
      - gl-code-quality-report.json
  allow_failure: true
```

Duplication #

The `duplication_go` job looks for duplicate code across Go files. The default duplication threshold is 50 tokens, but it can be adjusted as desired via a variable. The job also defaults to checking all `*.go` files while excluding all `*_test.go` files, since test code tends to have a lot of duplicate boilerplate. This is done by creating a list of files to check in `before_script`, so it can be easily overridden without impacting other job logic, and that list is passed via a variable. The results from this job are also saved as a GitLab Code Quality JSON report uploaded to GitLab, so they're available in the Code Quality merge request widget. Full details on this job can be found in the GitLab PMD CPD project and are beyond the scope of this post.

```yaml
duplication_go:
  image:
    name: registry.gitlab.com/gitlab-ci-utils/gitlab-pmd-cpd:latest
    entrypoint: ['']
  needs: []
  variables:
    PMDCPD_MIN_TOKENS: 50
    PMDCPD_LANGUAGE: 'go'
    PMDCPD_DIR_FILES: '--file-list=go_files.txt'
    PMDCPD_RESULTS_BASE: 'pmd-cpd-results-go'
    PMDCPD_RESULTS: '${PMDCPD_RESULTS_BASE}.xml'
  rules:
    - exists:
        - '**/*.go'
  before_script:
    - find . -type f -name "*.go" ! -name "*_test.go" > go_files.txt
  script:
    - /gitlab-pmd-cpd/pmd-cpd.sh
  artifacts:
    paths:
      - ${PMDCPD_RESULTS_BASE}.*
    reports:
      codequality: ${PMDCPD_RESULTS_BASE}.json
```

Build #

There are two build jobs, both optional; either or both can be included via a variable. These jobs specifically build binaries, so if the build is only in a Dockerfile, for example, they may not be required. If either job is included, its `needs` are set to the `go_mod_download` job to use those downloaded dependencies.

Build current operating system and architecture #

The `go_build` job performs a build for only the current OS/architecture (Linux/amd64 in the given container) and saves the binary as an artifact, with the binary name and main package directory specified via variables.

go_build:

extends:

- .go

needs:

- job: go_mod_download

optional: true

rules:

- if: $GO_BUILD_CURRENT

variables:

GO_BUILD_NAME: $CI_PROJECT_NAME

GO_MAIN_DIR: '.'

script:

- go build $GO_BUILD_ARGS -o ./${GO_BIN_DIR}/${GO_BUILD_NAME} $GO_MAIN_DIR

artifacts:

paths:

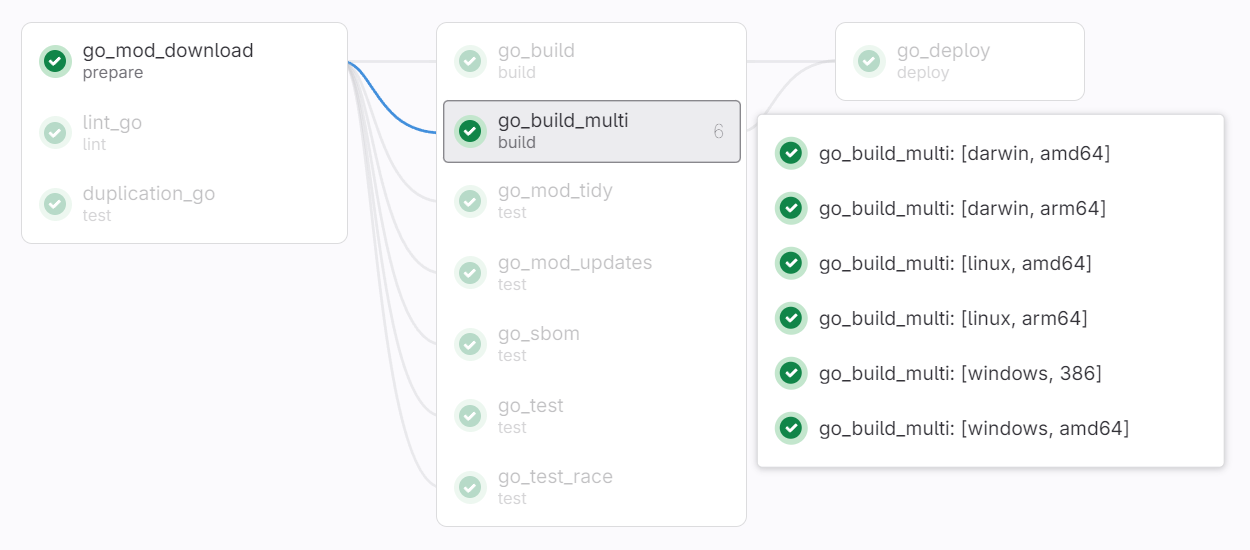

- ./${GO_BIN_DIR}/${GO_BUILD_NAME}*Build multiple operating systems and architectures #

The `go_build_multi` job also performs a build, but in a parallel matrix job for a variety of OS/architecture combinations, all of which are saved as artifacts with the OS/architecture appended to the binary name. If the OS is Windows, the job adds the `.exe` extension to the binary name. The matrix of targets can be overridden as necessary.

```yaml
go_build_multi:
  extends:
    - .go
  needs:
    - job: go_mod_download
      optional: true
  rules:
    - if: $GO_BUILD_MULTI
  variables:
    GO_BUILD_NAME: $CI_PROJECT_NAME
    GO_MAIN_DIR: '.'
  parallel:
    matrix:
      - TARGET_OS: 'darwin'
        TARGET_ARCH: ['amd64', 'arm64']
      - TARGET_OS: 'linux'
        TARGET_ARCH: ['amd64', 'arm64']
      - TARGET_OS: 'windows'
        TARGET_ARCH: ['386', 'amd64']
  script:
    # POSIX test uses "=" (not "==") so this also works in Alpine's sh
    - if [ "$TARGET_OS" = 'windows' ]; then export EXTENSION='.exe'; fi
    - >
      env GOOS=${TARGET_OS} GOARCH=${TARGET_ARCH}
      go build $GO_BUILD_ARGS -o ./${GO_BIN_DIR}/${GO_BUILD_NAME}_${TARGET_OS}_${TARGET_ARCH}${EXTENSION} $GO_MAIN_DIR
  artifacts:
    paths:
      - ./${GO_BIN_DIR}/${GO_BUILD_NAME}*
```

The parallel matrix runs all of the builds concurrently for efficiency.
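GitLab expands each `parallel:matrix` entry into one job per combination of values, so this matrix yields six jobs (two architectures for each of three operating systems). The sketch below reproduces the resulting artifact names in Go; `binaryName`, the `target` type, and the `myapp` project name are hypothetical and only mirror the shell logic in the job.

```go
package main

import "fmt"

// target is one OS/architecture combination from the go_build_multi matrix.
type target struct {
	OS, Arch string
}

// targets lists the combinations GitLab creates from the matrix:
// each TARGET_OS paired with each of its TARGET_ARCH values.
var targets = []target{
	{"darwin", "amd64"}, {"darwin", "arm64"},
	{"linux", "amd64"}, {"linux", "arm64"},
	{"windows", "386"}, {"windows", "amd64"},
}

// binaryName mirrors the -o file name built in the job script:
// <name>_<os>_<arch>, with ".exe" appended for Windows.
func binaryName(name string, t target) string {
	ext := ""
	if t.OS == "windows" {
		ext = ".exe"
	}
	return fmt.Sprintf("%s_%s_%s%s", name, t.OS, t.Arch, ext)
}

func main() {
	// "myapp" stands in for $CI_PROJECT_NAME.
	for _, t := range targets {
		fmt.Println(binaryName("myapp", t))
	}
}
```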

Unit and integration testing #

There are two test jobs, both of which run in a custom container image. This image is based on the `golang` image, with two sets of pre-installed dependencies:

- Tools to generate JUnit-formatted test results and Cobertura coverage reports.

- OS dependencies, including `gcc`, that are required to run with `-race`, which requires Cgo.

There is a `.go_test` template, which extends the `.go` template and specifies the version of this image. All test jobs extend it rather than the `.go` template.

```yaml
.go_test:
  extends:
    - .go
  image: registry.gitlab.com/gitlab-ci-utils/container-images/go-test:2.0.1
  artifacts:
    when: always
```

As with the build jobs, if there are dependencies, each test job needs the `go_mod_download` job.

Tests with code coverage #

The `go_test` job runs all tests with code coverage and processes the results, generating several additional reports for ingestion by GitLab and for reviewing pipeline results.

All tests are run, generating coverage information for all packages. Well, the goal is all packages, but there's an open issue on Go not including coverage data for packages with no tests. The workaround used here is the `-coverpkg` argument, which specifies the packages to include in the coverage data, set through the variable `GO_COVER_PACKAGES`. Ideally this could simply be set to `./...`, but this is one of the places where redirecting `GOPATH` has an impact. Since `GOPATH` is within the current working directory, `./...` includes all of the project dependencies. So `GO_COVER_PACKAGES` is set using `go list ./...`, which only includes packages in the current module, and the result is converted to a comma-delimited list, as `-coverpkg` requires. This is done in `before_script` so it can be easily overridden without impacting other test logic.
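The `tr`/`sed` pipeline only reshapes text: one package path per line becomes a single comma-separated value with no trailing comma. A minimal Go sketch of the same transformation follows; `coverPackages` and the `example.com/app` module path are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// coverPackages turns newline-separated `go list ./...` output into the
// comma-separated list -coverpkg expects. Skipping empty lines also drops
// the trailing separator that `sed 's/,$//'` removes in the shell version.
func coverPackages(goListOutput string) string {
	var pkgs []string
	for _, line := range strings.Split(goListOutput, "\n") {
		if line = strings.TrimSpace(line); line != "" {
			pkgs = append(pkgs, line)
		}
	}
	return strings.Join(pkgs, ",")
}

func main() {
	// Hypothetical `go list ./...` output for a module named example.com/app.
	out := "example.com/app\nexample.com/app/internal/store\n"
	fmt.Println(coverPackages(out)) // example.com/app,example.com/app/internal/store
}
```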

```yaml
before_script:
  # Get list of packages, replace newline with comma, and remove trailing comma.
  - export GO_COVER_PACKAGES=$(go list ./... | tr '\n' ',' | sed 's/,$//')
```

Even with this, there's still one quirk, as noted in the linked Go issue: packages with no test files are not included in the coverage data. The best workaround seems to be creating a test file with just the package declaration (for example, `package foo_test`); the coverage is then included as zero percent for all functions in that package (`foo` in this example). The fix for the original Go issue has been merged and is scheduled for Go 1.22.

When the tests are run, in addition to generating coverage data, the test output is also saved to a file (`tests.txt`) that is used for reporting. Once the tests complete, the `go tool cover` command outputs a summary of coverage information by function, and GitLab collects the total coverage from the job log.
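GitLab matches the job's `coverage` regular expression, `total:\s+\(statements\)\s+\d+.\d+%`, against the job log, where the last line of `go tool cover -func` output reports the total. Go's `regexp` package (RE2 syntax) accepts the same pattern, so a quick sanity check against sample output can look like the sketch below; the sample log lines and the `totalCoverage` helper are illustrative assumptions.

```go
package main

import (
	"fmt"
	"regexp"
)

// coverageRe is the go_test job's `coverage` pattern without the
// surrounding slashes that GitLab requires in .gitlab-ci.yml.
var coverageRe = regexp.MustCompile(`total:\s+\(statements\)\s+\d+.\d+%`)

// totalCoverage returns the matched summary text, if any.
func totalCoverage(log string) (string, bool) {
	m := coverageRe.FindString(log)
	return m, m != ""
}

func main() {
	// Hypothetical tail of `go tool cover -func coverage.out` output.
	log := "example.com/app/store.go:5:\tGet\t\t100.0%\n" +
		"total:\t\t\t(statements)\t66.7%\n"
	if m, ok := totalCoverage(log); ok {
		fmt.Printf("%q\n", m)
	}
}
```

Note that the unescaped `.` in the pattern matches any character; `\.` would be stricter, although both match real `go tool cover` totals.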

```yaml
script:
  - go test -v -coverpkg="$GO_COVER_PACKAGES" -coverprofile=coverage.out -covermode=count ./... | tee tests.txt
  - go tool cover -func coverage.out
```

The various test summary reports are generated in `after_script`, which ensures that errors generating reports do not fail the job. This includes:

- Converting the coverage data to a Cobertura coverage report, ingested by GitLab. This allows coverage data to be visible in the merge request diff view.

- Converting the coverage data to an HTML coverage report, which is saved as an artifact.

- Converting the test output to a JUnit report, ingested by GitLab. This allows the test summary to be presented in a GitLab merge request widget.

This results in the following job configuration:

```yaml
go_test:
  extends:
    - .go_test
  needs:
    - job: go_mod_download
      optional: true
  before_script:
    # Get list of packages, replace newline with comma, and remove trailing comma.
    # If `./...` is used, coverage includes packages in GOPATH since set within
    # the cwd.
    # Done in before_script so it can be easily overridden.
    - export GO_COVER_PACKAGES=$(go list ./... | tr '\n' ',' | sed 's/,$//')
  script:
    - go test -v -coverpkg="$GO_COVER_PACKAGES" -coverprofile=coverage.out -covermode=count ./... | tee tests.txt
    - go tool cover -func coverage.out
  after_script:
    # Generate Cobertura coverage report for GitLab
    - gocover-cobertura -by-files < coverage.out > coverage.xml
    # Generate HTML coverage report
    - go tool cover -html coverage.out -o coverage.html
    # Generate JUnit report for GitLab
    - go-junit-report < tests.txt > junit.xml
  coverage: '/total:\s+\(statements\)\s+\d+.\d+%/'
  artifacts:
    expose_as: 'Go Test Coverage Report'
    paths:
      - coverage.html
    reports:
      coverage_report:
        coverage_format: cobertura
        path: coverage.xml
      junit: junit.xml
```

Test race conditions #

The `go_test_race` job runs all tests with the `-race` flag to check for race conditions. It sets `CGO_ENABLED` to `1`, as required to use `-race`. No artifacts are saved, since they are all covered by the `go_test` job.

```yaml
go_test_race:
  extends:
    - .go_test
  needs:
    - job: go_mod_download
      optional: true
  variables:
    # Cgo is required for -race.
    CGO_ENABLED: 1
  script:
    - go test -race ./...
```

If tests with `-race` run slowly on GitLab.com runners, consider using more capable runners. By observation (not formal testing), this job is CPU-bound and appears to scale roughly linearly with CPU count (that is, doubling the CPUs cuts the execution time in half), at least up through the large runners. See the GitLab SaaS runner details in the docs.

Analysis jobs #

There are several application analysis jobs performed under the umbrella of confirming the software is in a deployable state and generating the reporting required when deploying the software.

Missing or extraneous dependencies #

The `go_mod_tidy` job checks whether the `go mod tidy` command produces any changes, which effectively identifies any missing or extraneous dependencies in the project that can, and should, be fixed with `go mod tidy`. This job only runs if a `go.sum` file exists, and if there are dependencies, it needs the `go_mod_download` job.

```yaml
go_mod_tidy:
  extends:
    - .go
  needs:
    - job: go_mod_download
      optional: true
  rules:
    - exists:
        - '**/go.sum'
  script:
    - |
      # Check if go mod tidy produces any changes
      if [ ! -f "go.mod" ]; then echo "go.mod file not found" && exit 2; fi
      cp go.mod go.mod.before
      cp go.sum go.sum.before
      go mod tidy
      diff go.mod.before go.mod && diff go.sum.before go.sum
```

Dependency updates #

The

go_mod_updates

job identifies any potential updates in direct dependencies. This goes a step

beyond the core Go command go list -m -u, which identifies potential updates

in any dependencies - direct or transitive. Updates to transitive dependencies

can be out of the project's direct control for various reasons, so they are

ignored in this case to avoid unnecessary job failures. The job uses the

go list -m -u JSON results to find direct dependency updates, and fail any are

found. This job only runs if a go.sum file exists, and if there are

dependencies needs the go_mod_download job. It also allows failure since its

primary purpose is to identify the available dependency updates.

```yaml
go_mod_updates:
  extends:
    - .go
  needs:
    - job: go_mod_download
      optional: true
  rules:
    - exists:
        - '**/go.sum'
  allow_failure: true
  before_script:
    - apk add jq
  script:
    # Waiting on https://github.com/golang/go/issues/40364 to allow listing
    # only direct dependencies so don't have to check JSON.
    - |
      # Check for available updates to any direct module dependencies
      UPDATES=$(go list -u -m -json all | \
        jq -c -r 'select(.Update != null and .Indirect == null) | "\(.Path) \(.Version) [\(.Update.Version)]"')
      # Print with %s so any "%" in the output is not treated as a format verb
      printf '%s\n' "$UPDATES"
      if [ -n "$UPDATES" ]; then exit 1; fi
```

Note: there is an open issue to allow `go list` to show only updates to direct dependencies, which will likely be used instead once implemented.

Software Bill of Materials (SBOM) #

The `go_sbom` job generates a CycloneDX-formatted SBOM. CycloneDX is an industry-standard format for reporting the dependencies used by an application. Note that SBOM generation differs slightly depending on the type: `app`, `mod`, or `bin`. See the documentation for complete details.

```yaml
go_sbom:
  image: !reference [.go, image]
  variables:
    SBOM_TYPE: 'app'
    SBOM_ARGS: '-main=./'
  needs:
    - job: go_mod_download
      optional: true
  rules:
    - exists:
        - '**/go.sum'
  before_script:
    - go install github.com/CycloneDX/cyclonedx-gomod/cmd/cyclonedx-gomod@v1.4.1
  script:
    - cyclonedx-gomod $SBOM_TYPE $SBOM_ARGS -json=true -licenses=true -output=sbom.json -output-version=1.4 $SBOM_PATH
  artifacts:
    paths:
      - sbom.json
```

Deployment #

The `go_deploy` job runs in tag pipelines when either build job runs, and publishes the binaries to GitLab's generic package registry under the Git tag. The package registry for GitLab's release-cli tool, which was also the inspiration for this job, is a good example of the result.

```yaml
go_deploy:
  extends:
    - .go
  image: alpine:latest
  variables:
    # See https://docs.gitlab.com/ee/user/packages/generic_packages/#publish-a-package-file
    PACKAGE_REGISTRY_URL: '$CI_API_V4_URL/projects/$CI_PROJECT_ID/packages/generic/$CI_PROJECT_NAME'
  needs:
    - job: go_build
      optional: true
    - job: go_build_multi
      optional: true
  rules:
    # Deploy if tag pipeline and at least one build job was executed
    - if: ($GO_BUILD_CURRENT || $GO_BUILD_MULTI) && $CI_COMMIT_TAG
  before_script:
    - apk add curl
  script:
    - cd $GO_BIN_DIR
    - |
      # --fail makes curl exit non-zero on HTTP errors so a failed upload fails the job
      for FILE in *; do
        echo "Deploying: $FILE"
        curl --fail --header "JOB-TOKEN: ${CI_JOB_TOKEN}" --upload-file "${FILE}" "${PACKAGE_REGISTRY_URL}/${CI_COMMIT_TAG}/${FILE}"
      done
```

In the future, this could be updated to publish to GitLab's Go proxy, but that feature is currently experimental, is likely to remain so for the foreseeable future, and is disabled on GitLab.com.

Collection of CI jobs #

To simplify use of the pipeline, a `Go-Build-Test-Deploy` collection has been created that includes all of the Go jobs. As previously discussed, each job contains `rules` logic ensuring it only runs when appropriate, so an individual Go project's `.gitlab-ci.yml` file can be as simple as the following, and all appropriate jobs are run.

```yaml
include:
  - project: 'gitlab-ci-utils/gitlab-ci-templates'
    ref: 'main'
    file:
      - '/collections/Go-Build-Test-Deploy.gitlab-ci.yml'

# Optional variables to include the build and deploy jobs
variables:
  GO_BUILD_CURRENT: 'true'
  GO_BUILD_MULTI: 'true'
```

Summary #

This post detailed a complete GitLab CI pipeline for Go projects, including dependency management, code analysis, code quality, build, test, and deployment. The pipeline is designed to be flexible, allowing jobs to be included or excluded as desired, and optimized for parallel execution. It also includes several reports to leverage GitLab capabilities for review of pipeline results.

Aaron Goldenthal
